Machine learning explained with gifs: style transfer

Tue, May 29, 2018

About style transfer

Pioneered in 2015, style transfer is a technique that uses neural networks to transfer the style of a painting onto an existing photograph. The original paper is A Neural Algorithm of Artistic Style by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge.

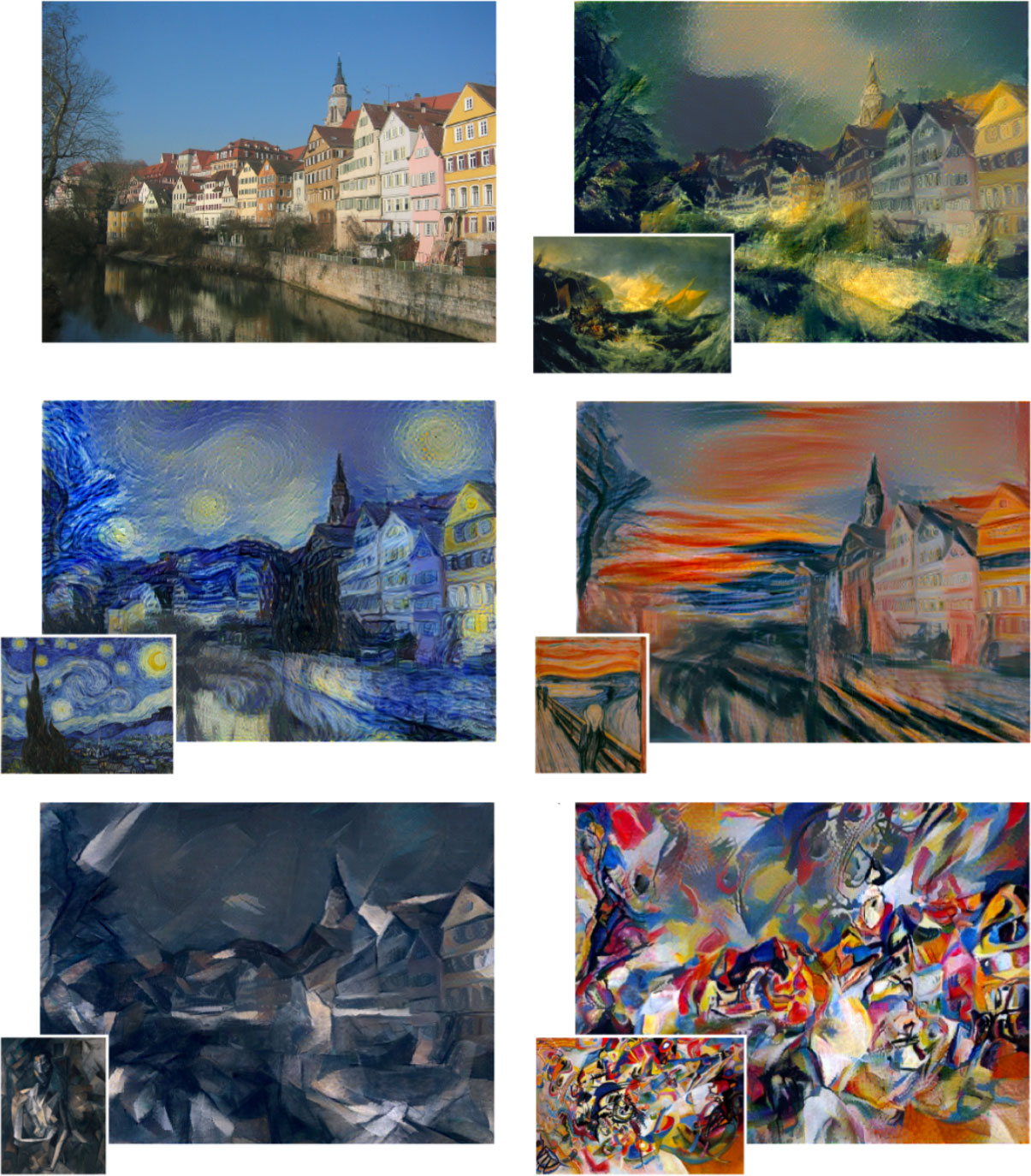

Here are a few examples taken from it:

Style transfer example from the original paper

How it works

This gif is meant to give you a rough idea of how style transfer works in the original paper:

Style transfer explained with a gif

Although I tried to make the gif self-explanatory, here are a few more details:

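- “Filtered image” means the output of a truncated VGG network (the paper uses VGG19). In other words, we run the image through the network but stop at some intermediate layer. Which layer depends on the goal: the content representation comes from a single, deeper layer, while the style representation combines several layers.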

- Gram matrix: you’ll find an explanation below. The general idea is that if coefficient (n1, n2) is high, filters n1 and n2 tend to activate for the same pixels.

- In practice, optimizing the random pixels takes a lot more than 5 steps.
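To make the pipeline in the gif more concrete, here is a minimal NumPy sketch of the quantity being minimized, following the content and style loss definitions in the Gatys et al. paper. It assumes the feature maps have already been extracted from the network (that part is not shown) and are stored as arrays of shape (filters, height, width); the function names, the default alpha/beta values and the equal layer weights are illustrative assumptions, not the paper's code. In a real implementation, an autodiff framework computes the gradient of this loss with respect to the generated image's pixels, and an optimizer updates those pixels step by step.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix of one layer's feature maps, shape (n_filters, height, width)."""
    flat = features.reshape(features.shape[0], -1)  # one row vector per filter
    return flat @ flat.T  # (n_filters, n_filters) matrix of scalar products


def content_loss(gen_features, content_features):
    """Half the squared error between two feature maps from the same layer."""
    return 0.5 * np.sum((gen_features - content_features) ** 2)


def style_layer_loss(gen_features, style_features):
    """Squared error between Gram matrices, normalized by 4 * N^2 * M^2."""
    n_filters = gen_features.shape[0]                          # N: number of filters
    map_size = gen_features.shape[1] * gen_features.shape[2]   # M: height * width
    diff = gram_matrix(gen_features) - gram_matrix(style_features)
    return np.sum(diff ** 2) / (4.0 * n_filters ** 2 * map_size ** 2)


def total_loss(gen_content, content, gen_style_layers, style_layers,
               alpha=1.0, beta=1e3, layer_weights=None):
    """alpha * content loss + beta * weighted sum of per-layer style losses.

    alpha, beta and the equal default layer weights are illustrative choices.
    """
    if layer_weights is None:
        layer_weights = [1.0 / len(style_layers)] * len(style_layers)
    style = sum(w * style_layer_loss(g, s)
                for w, g, s in zip(layer_weights, gen_style_layers, style_layers))
    return alpha * content_loss(gen_content, content) + beta * style


# Usage with random arrays standing in for real VGG activations.
rng = np.random.default_rng(0)
content = rng.standard_normal((64, 32, 32))
style_layers = [rng.standard_normal((64, 32, 32)), rng.standard_normal((128, 16, 16))]
gen_content = rng.standard_normal((64, 32, 32))
gen_style_layers = [rng.standard_normal((64, 32, 32)), rng.standard_normal((128, 16, 16))]
print(total_loss(gen_content, content, gen_style_layers, style_layers))
```

The ratio between alpha and beta controls the trade-off: a larger beta pushes the result toward the painting's texture, a larger alpha keeps it closer to the photograph's layout.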

The paper is very easy to read; I highly recommend having a look.

Bonus: how to calculate a Gram Matrix

(This explanation comes from Alexander Jung’s summary of the paper)

- Take the activations of a layer. That layer will contain some convolution filters (e.g. 128), each one having its own activations.

- Convert each filter’s activations to a (1-dimensional) vector.

- For every pair of filters, calculate the scalar product of their two vectors.

- Add the scalar product result as an entry to a matrix of size #filters x #filters (e.g. 128x128).

- Repeat that for every pair to get the Gram Matrix.

- The Gram Matrix roughly represents the texture of the image.
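The steps above can be sketched in NumPy, first as a literal loop over filter pairs and then as the equivalent single matrix product. The (filters, height, width) layout and the function names are assumptions for illustration; frameworks that store activations channels-last would need a transpose first.

```python
import numpy as np


def gram_matrix_steps(activations):
    """Gram matrix built step by step; activations has shape (n_filters, height, width)."""
    n_filters = activations.shape[0]
    # Convert each filter's activation map to a 1-dimensional vector.
    vectors = [activations[i].ravel() for i in range(n_filters)]
    # Matrix of size #filters x #filters to hold the results.
    gram = np.zeros((n_filters, n_filters))
    # Scalar product for every pair of filters.
    for i in range(n_filters):
        for j in range(n_filters):
            gram[i, j] = np.dot(vectors[i], vectors[j])
    return gram


def gram_matrix_fast(activations):
    """Same result as a single matrix product: flattened rows times their transpose."""
    flat = activations.reshape(activations.shape[0], -1)
    return flat @ flat.T


# Two 2x2 filters that are both active on the top-left pixel only.
acts = np.array([[[1, 0], [0, 1]],
                 [[1, 1], [0, 0]]], dtype=float)
print(gram_matrix_steps(acts).tolist())  # [[2.0, 1.0], [1.0, 2.0]]
```

The off-diagonal entry is 1 because the two filters overlap on exactly one pixel; each diagonal entry is 2 because each filter is active on two pixels. The vectorized version is what you would use in practice, since the double loop grows quadratically with the number of filters in pure Python.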

More resources

Style transfer today

Since the original paper, style transfer has improved a lot, both in speed and quality. Here’s an example of what you can do with the latest paper: